Day 64 (of 2025/26) Thinkings on grade inflation and comparing grad rates with standardized exam results…

Two topics have been irking me on social media – in particular on the former edutwitter environment – lots of people pushing back on ‘grade inflation = bad reading’ and ‘increased grad results = horrible SAT achievement’ (among others).

I got thoughts.

Grade Inflation – not going to argue around whether or not students should progress with their age grouping – as long as our system continues to organize learning cohorts by year of birth… that should continue until senior years (in my context, things shift as students enter the grade 10-12 graduation pathway on their way to becoming ‘educated citizens’). As for what we do with them with the 180 days per grade year… that ought to be left to (again in our context) teacher autonomy to assess and evaluate where students are, and what their next steps ought to be.

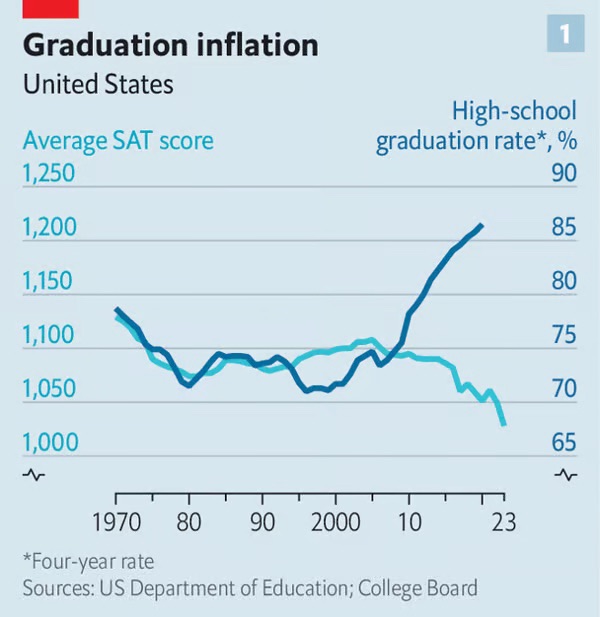

But on some of the media spheres, a graph has been shared and critiqued:

Grad rates going up, while SAT scores (media portrayed as ‘reading’ and ‘mathing’) go down… just funny that people are counting on things like the SAT – a multiple-choice/essay-dominated methodology – to be comparable to classrooms doing a multitude of other activities that are more engaging and relevant and lead to deep learning, as opposed to the short-term-focused strategies needed for multiple-choice responses and that epic of the 17th century: the essay.

Is it grade inflation when students are succeeding in less archaic ways of showing learning? Is it fair to the students to then have to take an unfamiliar test to then confirm that they have knowledge and skills? When… when we know that indeed there are skills people can learn to do well in tests in this format without having the theorized necessary knowledge – heck, there are industries around how to take on tests like the SAT… and if you don’t think cheating is a subset of this industry… well, go right ahead thinking that…

Exams like the SAT (and I’ll include PISA – having had a cohort write the test) made me think of the BC Foundation Skills Assessments I was just part of a team assessing – well crafted and likely the best tests students will write in their learning career. Very few classrooms (haha – if any, because of how politicized these assessments have become over the decades) do practice sets, have questions field tested and norm referenced, and have accountability for each possible response, so that when doing item-by-item analysis they can use the feedback to give them direction on what the learner may need to be successful upon a redo.

There are some positives when these measurements are mindfully done. But we can’t pretend that a test necessarily measures how much of a grade a student should earn when there are so many different(iated) ways to archive, capture and present evidence of knowing and doing.

Standardized tests are not something I am directly against. There are many good things that come out of some of them – like the Woodcock-Johnson… but those are not meant ‘for all’… and it’s why I’m also conflicted about reading assessments: ought they be assigned to students based on their independent level or ‘expected grade/age level’? I’m comfortable with ‘why not some of each’ ~ but I also appreciate the information that no/wrong answers can also provide!

Love it when those tests are mindfully used… skeptical when they are part of an entrance/exit process… but probably because my bias was laid in early when I was exempted from the old system of ‘provincial exams’ that once were universal… I got an aggregate mark instead and things ended up working out okay (depending on whom you talk with).

So when people start combining them… I don’t believe two wrongs make things right. It’s like me tracking attendance to determine if someone can cross the graduation stage. I can argue that one might impact the other, but really… two things with so many variables and neither really looking at the important part – the learning journey.

Just because you put two graphs together… gotta consider not only correlation, but causation, and also coincidence. As we better unpack neurological differences, it doesn’t surprise me that we enable more students to graduate (with the added pressure to graduate ‘on time’ according to very biased social/conditional presumptions). We aren’t expecting a fish to show their best ability by climbing a tree.

And fewer students succeeding at the SAT when many are trying to unpack the fragile nature of ‘testing’ AND some jurisdictions are burning out the interest in completing ‘those tests’ that take up so much prep time and don’t connect with their day-to-day experiences AND there is more wondering whether going to those universities is really worth the cost to earn the credentials AND the jobs that were once promised are becoming fewer and fewer while new occupations, ones not found via universities, are starting to show different post-secondary avenues to follow – so write the SAT to see how you do, but don’t pay the prep programs all the money (especially when places like the US see the legacy families getting more of the prime university spots anyways right now… less and less about ‘earning a spot’ than ensuring your ancestors arranged you a spot since programs promoting DEI and affirmative action were removed…).

SAT shows how well a student does on a test. Graduation rate shows how well the students played the game of school.

Both have issues. Let’s unpack some of those biases and acknowledge the system is complicit in creating what it is. Ooh – Will Richardson has another Confronting Education cohort coming up in February… take a dive and learn with him! Mention my name for a 10% discount!
